Table of Contents

- Workshop Schedule

- Overview

- Dates

- New Rules and Awards

- COCO Challenges

- Mapillary Challenges

- Guest Competition: The First LVIS Challenge

- Invited Speaker

Location: 301

Workshop Schedule - 27.10.2019

| 9:00 | Opening Remark | Tsung-Yi Lin |

|---|---|---|

| 9:10 | Detection Intro Talk | Yin Cui |

| 9:20 | COCO Detection Talks | |

| 9:50 | Panoptic Intro Talk | Yin Cui |

| 10:00 | COCO Panoptic Talks | |

| 10:30 | Coffee | |

| 11:00 | Keypoints Challenge Intro Talk | Tsung-Yi Lin |

| 11:10 | COCO Keypoints Talks | |

| 11:45 | DensePose Challenge Intro Talk | Natalia Neverova |

| 11:55 | COCO DensePose Talks | |

| 12:05 | Lunch | |

| 1:30 | Invited Talk: "Detection and Friends" | Alex Berg |

| 2:10 | Mapillary Intro Talk | Peter Kontschieder |

| 2:20 | Mapillary Talks | |

| 2:55 | Invited Talk: "Bridging the Sim-to-Real gap in Computer Vision benchmarks" | Andrej Karpathy |

| 3:35 | Coffee | |

| 4:05 | LVIS Challenge Intro Talk | Ross Girshick |

| 4:25 | LVIS Talks |

2. Overview

The goal of the joint COCO and Mapillary Workshop is to study object recognition in the context of scene understanding. While both the COCO and Mapillary challenges look at the general problem of visual recognition, the underlying datasets and the specific tasks in the challenges probe different aspects of the problem.

COCO is a widely used visual recognition dataset, designed to spur object detection research with a focus on full scene understanding. In particular: detecting non-iconic views of objects, localizing objects in images with pixel level precision, and detection in complex scenes. Mapillary Vistas is a new street-level image dataset with emphasis on high-level, semantic image understanding, with applications for autonomous vehicles and robot navigation. The dataset features locations from all around the world and is diverse in terms of weather and illumination conditions, capturing sensor characteristics, etc.

Mapillary Vistas is complementary to COCO in terms of dataset focus and can be readily used for studying various recognition tasks in a visually distinct domain from COCO. COCO focuses on recognition in natural scenes, while Mapillary focuses on recognition of street-view scenes. We encourage teams to participate in challenges across both datasets to better understand the current landscape of datasets and methods.

Challenge tasks: COCO helped popularize instance segmentation and this year both COCO and Mapillary feature this task, where the goal is to simultaneously detect and segment each object instance. As detection has matured over the years, COCO is no longer featuring the bounding-box detection task. While the leaderboard will remain open, the bounding-box detection task is not a workshop challenge; instead we encourage researchers to focus on the more challenging and visually informative instance segmentation task or to tackle low-shot object detection in the LVIS Challenge. As in previous years, COCO features the popular person keypoint challenge track. In addition, COCO features a DensePose track for mapping all human pixels to a 3D surface of the human body for the second time.

This year we feature panoptic segmentation task for the second time. Panoptic segmentation addresses both stuff and thing classes, unifying the typically distinct semantic and instance segmentation tasks. The definition of panoptic is “including everything visible in one view”, in this context panoptic refers to a unified, global view of segmentation. The aim is to generate coherent scene segmentations that are rich and complete, an important step toward real-world vision systems. For more details about the panoptic task, including evaluation metrics, please see this paper. Both COCO and Mapillary will feature panoptic segmentation challenges.

This workshop offers the opportunity to benchmark computer vision algorithms on the COCO and Mapillary Vistas datasets. The instance and panoptic segmentation tasks on the two datasets are the same, and we use unified data formats and evaluation criteria for both. We hope that jointly studying the unified tasks across two distinct visual domains will provide a highly comprehensive evaluation suite for modern visual recognition and segmentation algorithms and yield new insights.

3. Challenge Dates

4. New Rules and Awards

- Participants must submit a technical report that includes a detailed ablation study of their submission (suggested length 1-4 pages). The reports will be made public. Please, use this latex template for the report and send it to coco.iccv19@gmail.com. This report will substitute the short text description that we requested previously. Only submissions with the report will be considered for any award and will be put in the COCO leaderboard.

- This year for each challenge track we will have two different awards: best result award and most innovative award. The most innovative award will be based on the method description in the submitted technical reports and decided by the COCO award committee. The commitee will invite teams to present at the workshop based on the innovations of the submissions rather than the best scores.

- This year we introduce single best paper award for the most innovative and successful solution across all challenges. The winner will be determined by the workshop organization committee.

5. COCO Challenges

COCO is an image dataset designed to spur object detection research with a focus on detecting objects in context. The annotations include instance segmentations for object belonging to 80 categories, stuff segmentations for 91 categories, keypoint annotations for person instances, and five image captions per image. The specific tracks in the COCO 2018 Challenges are (1) object detection with segmentation masks (instance segmentation), (2) panoptic segmentation, (3) person keypoint estimation, and (4) DensePose. We describe each next. Note: neither object detection with bounding-box outputs nor stuff segmentation will be featured at the COCO 2019 challenge (but evaluation servers for both tasks remain open).

5.1. COCO Object Detection Task

The COCO Object Detection Task is designed to push the state of the art in object detection forward. Note: only the detection task with object segmentation output (that is, instance segmentation) will be featured at the COCO 2019 challenge. For full details of this task please see the COCO Object Detection Task.

5.2. COCO Panoptic Segmentation Task

The COCO Panoptic Segmentation Task has the goal of advancing the state of the art in scene segmentation. Panoptic segmentation addresses both stuff and thing classes, unifying the typically distinct semantic and instance segmentation tasks. For full details of this task please see the COCO Panoptic Segmentation Task.

5.3. COCO Keypoint Detection Task

The COCO Keypoint Detection Task requires localization of person keypoints in challenging, uncontrolled conditions. The keypoint task involves simultaneously detecting people and localizing their keypoints (person locations are not given at test time). For full details of this task please see the COCO Keypoint Detection Task.

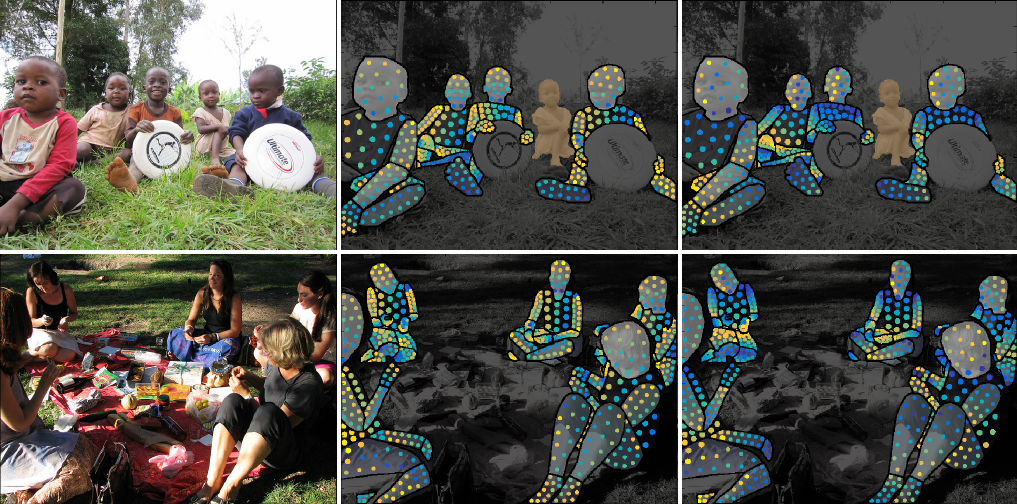

5.4. COCO DensePose Task

The COCO DensePose Task requires localization of dense person keypoints in challenging, uncontrolled conditions. The DensePose task involves simultaneously detecting people and localizing their dense keypoints, mapping all human pixels to a 3D surface of the human body. For full details of this task please see the COCO DensePose Task.

6. Mapillary Challenges

This year, for the second time, Mapillary Research is joining the popular COCO recognition tasks with the Mapillary Vistas dataset. Vistas is a diverse, pixel-accurate street-level image dataset for empowering autonomous mobility and transport at global scale. It has been designed and collected to cover diversity in appearance, richness of annotation detail, and geographic extent. The Mapillary challenges are based on the publicly available Vistas Research dataset, featuring:

- 28 stuff classes, 37 thing classes (w instance-specific annotations), and 1 void class

- 25K high-resolution images (18K train, 2K val, 5K test; w average resolution of ~9 megapixels)

- Global geographic coverage including North and South America, Europe, Africa, Asia, and Oceania

- Highly variable weather conditions (sun, rain, snow, fog, haze) and capture times (dawn, daylight, dusk, night)

- Broad range of camera sensors, varying focal length, image aspect ratios, and different types of camera noise

- Different capturing viewpoints (road, sidewalks, off-road)

6.1. Mapillary Vistas Object Detection Task

The Mapillary Vistas Object Detection Task emphasizes recognizing individual instances of both static street-image objects (like street lights, signs, poles) but also dynamic street participants (like cars, pedestrians, cyclists). This task aims to push the state-of-the-art in instance segmentation, targeting critical perception tasks for autonomously acting agents like cars or transportation robots. For full details of this task please see the Mapillary Vistas Object Detection Task.

6.2. Mapillary Vistas Panoptic Segmentation Task

The Mapillary Vistas Panoptic Segmentation Task targets the full perception stack for scene segmentation in street-images. Panoptic segmentation addresses both stuff and thing classes, unifying the typically distinct semantic and instance segmentation tasks. For full details of this task please see the Mapillary Vistas Panoptic Segmentation Task.

7. Guest Competition: The First LVIS Challenge

LVIS is a new, large-scale instance segmentation dataset that features > 1000 object categories, many of which have very few training examples. LVIS presents a novel low-shot object detection challenge to encourage new research in object detection. The COCO Workshop is happy to host the inaugural LVIS Challenge! For more information, please see LVIS challenge page.

8. Invited Speaker

Alex Berg

Facebook & UNC Chapel Hill

I am a research scientist at Facebook. My research examines a wide range of computational visual recognition, connections to natural language processing, psychology, and has a concentration on computational efficiency. I completed my PhD in computer science at UC Berkeley in 2005, and have worked alongside many wonderful people at Yahoo! Research, Columbia University, Stony Brook University, and am currently an associate professor (on leave) at UNC Chapel Hill.

Andrej Karpathy

Tesla

I am the Sr. Director of AI at Tesla, where I lead the team responsible for all neural networks on the Autopilot. Previously, I was a Research Scientist at OpenAI working on Deep Learning in Computer Vision, Generative Modeling and Reinforcement Learning. I received my PhD from Stanford, where I worked with Fei-Fei Li on Convolutional/Recurrent Neural Network architectures and their applications in Computer Vision, Natural Language Processing and their intersection. Over the course of my PhD I squeezed in two internships at Google where I worked on large-scale feature learning over YouTube videos, and in 2015 I interned at DeepMind on the Deep Reinforcement Learning team. Together with Fei-Fei, I designed and was the primary instructor for a new Stanford class on Convolutional Neural Networks for Visual Recognition (CS231n). The class was the first Deep Learning course offering at Stanford and has grown from 150 enrolled in 2015 to 330 students in 2016, and 750 students in 2017.