Table of Contents

Workshop Schedule - 09.09.2018

Note: Links to talk slides are available for select talks. For talks without slides, we suggest you contact the authors directly.

| 9:00 | Opening Comments + Detection Intro [Talk] | Ross Girshick |

|---|---|---|

| 9:15 | Winner [4x]: COCO Detection [Talk], COCO Keypoints [Talk], COCO Panoptic + Mapillary Panoptic [Talk] | Team Megvii (Face++) |

| 9:45 | Detection Winner [Talk] | Team MMDet |

| 10:00 | Keypoints Challenge Intro [Talk] | Matteo Ronchi |

| 10:15 | Keypoints Runner-Up [Talk] | Team MSRA |

| 10:30 | Coffee + Posters | |

| 11:00 | Panoptic Challenge Intro [Talk] | Alex Kirillov |

| 11:30 | Panoptic Runner-Up [Talk] | Team Caribbean |

| 11:45 | Panoptic Runner-Up [Talk] | Team PKU_360 |

| 12:00 | Lunch | |

| 1:30 | Talk: How to Satisfy the Thirst for Data? [Talk] | Andreas Geiger |

| 2:00 | DensePose Challenge Intro [Talk] | Riza Alp Guler |

| 2:15 | DensePose Winner [Talk] | Team BUPT-PRIV |

| 2:30 | DensePose Spotlights [Talk1, Talk2] | Teams: PlumSix, Sound of Silent |

| 2:45 | Mapillary Challenge Intro [Talk] | Peter Kontschieder |

| 3:00 | Mapillary Detection Winner [Talk] | Team DiDi Map Vision |

| 3:15 | Mapillary Panoptic Runner-Up [Talk] | Team TRI-ML |

| 3:30 | Coffee + Posters |

1. Overview

The goal of the joint COCO and Mapillary Workshop is to study object recognition in the context of scene understanding. While both the COCO and Mapillary challenges look at the general problem of visual recognition, the underlying datasets and the specific tasks in the challenges probe different aspects of the problem.

COCO is a widely used visual recognition dataset, designed to spur object detection research with a focus on full scene understanding. In particular: detecting non-iconic views of objects, localizing objects in images with pixel level precision, and detection in complex scenes. Mapillary Vistas is a new street-level image dataset with emphasis on high-level, semantic image understanding, with applications for autonomous vehicles and robot navigation. The dataset features locations from all around the world and is diverse in terms of weather and illumination conditions, capturing sensor characteristics, etc.

Mapillary Vistas is complementary to COCO in terms of dataset focus and can be readily used for studying various recognition tasks in a visually distinct domain from COCO. COCO focuses on recognition in natural scenes, while Mapillary focuses on recognition of street-view scenes. We encourage teams to participate in challenges across both datasets to better understand the current landscape of datasets and methods.

Challenge tasks: COCO helped popularize instance segmentation and this year both COCO and Mapillary feature this task, where the goal is to simultaneously detect and segment each object instance. As detection has matured over the years, COCO is no longer featuring the bounding-box detection task. While the leaderboard will remain open, the bounding-box detection task is not a workshop challenge; instead we encourage researchers to focus on the more challenging and visually informative instance segmentation task. As in previous years, COCO features the popular person keypoint challenge track. In addition, this year COCO will feature a DensePose track for mapping all human pixels to a 3D surface of the human body.

This year we are pleased to introduce the panoptic segmentation task with the goal of advancing the state of the art in scene segmentation. Panoptic segmentation addresses both stuff and thing classes, unifying the typically distinct semantic and instance segmentation tasks. The definition of panoptic is “including everything visible in one view”, in this context panoptic refers to a unified, global view of segmentation. The aim is to generate coherent scene segmentations that are rich and complete, an important step toward real-world vision systems. For more details about the panoptic task, including evaluation metrics, please see this paper. Both COCO and Mapillary will feature panoptic segmentation challenges.

This workshop offers the opportunity to benchmark computer vision algorithms on the COCO and Mapillary Vistas datasets. The instance and panoptic segmentation tasks on the two datasets are the same, and we use unified data formats and evaluation criteria for both. We hope that jointly studying the unified tasks across two distinct visual domains will provide a highly comprehensive evaluation suite for modern visual recognition and segmentation algorithms and yield new insights.

2. Challenge Dates

3. COCO Challenges

COCO is an image dataset designed to spur object detection research with a focus on detecting objects in context. The annotations include instance segmentations for object belonging to 80 categories, stuff segmentations for 91 categories, keypoint annotations for person instances, and five image captions per image. The specific tracks in the COCO 2018 Challenges are (1) object detection with segmentation masks (instance segmentation), (2) panoptic segmentation, (3) person keypoint estimation, and (4) DensePose. We describe each next. Note: neither object detection with bounding-box outputs nor stuff segmentation will be featured at the COCO 2018 challenge (but evaluation servers for both tasks remain open).

3.1. COCO Object Detection Task

The COCO Object Detection Task is designed to push the state of the art in object detection forward. Note: only the detection task with object segmentation output (that is, instance segmentation) will be featured at the COCO 2018 challenge. For full details of this task please see the COCO Object Detection Task.

3.2. COCO Panoptic Segmentation Task

The COCO Panoptic Segmentation Task has the goal of advancing the state of the art in scene segmentation. Panoptic segmentation addresses both stuff and thing classes, unifying the typically distinct semantic and instance segmentation tasks. For full details of this task please see the COCO Panoptic Segmentation Task.

3.3. COCO Keypoint Detection Task

The COCO Keypoint Detection Task requires localization of person keypoints in challenging, uncontrolled conditions. The keypoint task involves simultaneously detecting people and localizing their keypoints (person locations are not given at test time). For full details of this task please see the COCO Keypoint Detection Task.

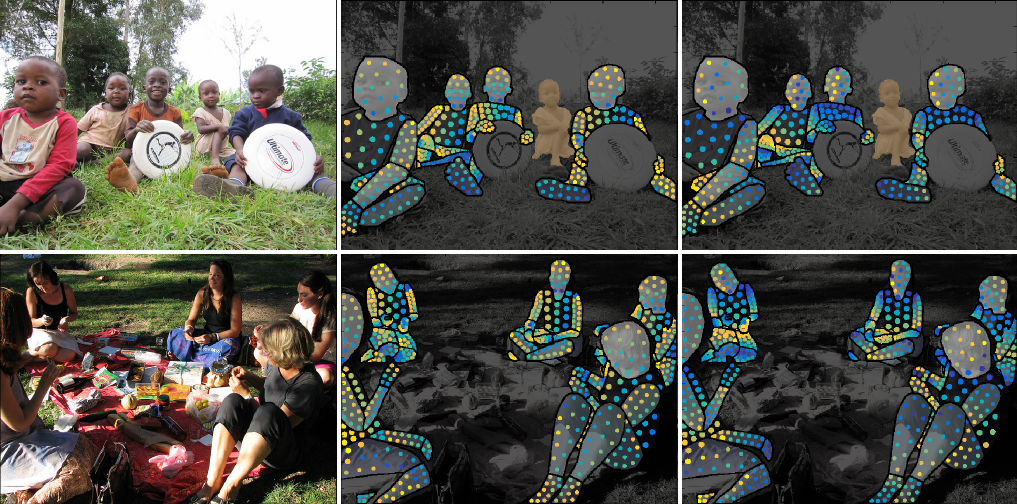

3.4. COCO DensePose Task

The COCO DensePose Task requires localization of dense person keypoints in challenging, uncontrolled conditions. The DensePose task involves simultaneously detecting people and localizing their dense keypoints, mapping all human pixels to a 3D surface of the human body. For full details of this task please see the COCO DensePose Task.

4. Mapillary Challenges

This year, Mapillary Research is joining the popular COCO recognition tasks with the Mapillary Vistas dataset. Vistas is a diverse, pixel-accurate street-level image dataset for empowering autonomous mobility and transport at global scale. It has been designed and collected to cover diversity in appearance, richness of annotation detail, and geographic extent. The Mapillary challenges are based on the publicly available Vistas Research dataset, featuring:

- 28 stuff classes, 37 thing classes (w instance-specific annotations), and 1 void class

- 25K high-resolution images (18K train, 2K val, 5K test; w average resolution of ~9 megapixels)

- Global geographic coverage including North and South America, Europe, Africa, Asia, and Oceania

- Highly variable weather conditions (sun, rain, snow, fog, haze) and capture times (dawn, daylight, dusk, night)

- Broad range of camera sensors, varying focal length, image aspect ratios, and different types of camera noise

- Different capturing viewpoints (road, sidewalks, off-road)

4.1. Mapillary Vistas Object Detection Task

The Mapillary Vistas Object Detection Task emphasizes recognizing individual instances of both static street-image objects (like street lights, signs, poles) but also dynamic street participants (like cars, pedestrians, cyclists). This task aims to push the state-of-the-art in instance segmentation, targeting critical perception tasks for autonomously acting agents like cars or transportation robots. For full details of this task please see the Mapillary Vistas Object Detection Task.

4.2. Mapillary Vistas Panoptic Segmentation Task

The Mapillary Vistas Panoptic Segmentation Task targets the full perception stack for scene segmentation in street-images. Panoptic segmentation addresses both stuff and thing classes, unifying the typically distinct semantic and instance segmentation tasks. For full details of this task please see the Mapillary Vistas Panoptic Segmentation Task.

5. Invited Speaker

Andreas Geiger

MPI-IS and University of Tübingen

Andreas Geiger is a full professor at the University of Tübingen and a group leader at the Max Planck Institute for Intelligent Systems. Prior to this, he was a visiting professor at ETH Zürich and a research scientist in the Perceiving Systems department of Dr. Michael Black at the MPI-IS. He received his PhD degree in 2013 from the Karlsruhe Institute of Technology. His research interests are at the intersection of 3D reconstruction, motion estimation and visual scene understanding. His work has been recognized with several prizes, including the 3DV best paper award, the CVPR best paper runner up award, the Heinz Maier Leibnitz Prize and the German Pattern Recognition Award. He serves as an area chair and associate editor for several computer vision conferences and journals (CVPR, ICCV, ECCV, PAMI, IJCV).